Beating the Fastest Lexer Generator in Rust

group: inks@inks.tedunangst.com

tags: #compiler #perf #programming #rust #text

tags: #backend #cpp #csharp #getfedihired #jobsearch #remotework #rust #rustlang

I'm currently looking for a remote software development job

I have plenty of experience making software using all sorts of languages, frameworks and tools, though I have the most experience with Rust, C++ and C#. I also usually build native cross-platform applications and backends.

You can find my full CV on my website https://luna.graphics

The stabilization PR for Rust's much-anticipated "let chains" feature (for the 2024 edition) was just merged! So in 12 weeks, it'll be available in version 1.88!

what if the poison were rust?

tags: #openbsd #rust

tags: #ai #golang #neovim #rust #rustlang #softwaredevelopment #vim #visualstudiocode #zed

Sigh, I think I might have to switch away from #VisualStudioCode. It seems the only thing they work on is #AI, to the detriment of everything else.

Shall I move back to #vim? Or rather #neovim. Do I still have the patience to configure that just the way I like it?

I could also try out that newfangled #zed editor that is getting all the hype these days.

One must-have feature is it having good vim keybindings though, I'm lost without them.

Package Manager for Markdown

I'm working on a project that is intended to encourage folk to write markdown text files which can then be combined into different bundles using a package manager.

Question for coders; Which package manager would you suggest I use?

Main criteria (in order) are:

1. Easy for someone with basic command line skills to edit the file and update version numbers and add additional packages.

2. All else being equal, a package manager that is more common and easier to set up is preferred.

#Markdown #CommonMark #PackageManager #Programming #Dev

#NPM #RubyGems #Cargo #PickingAMastodonInstance

tags: #development #dotnet #rust #rustlang

Always fun buying new domains... Especially when you're extremely excited about the content 🦀

Inspiration has returned, I am writing another :rust: #rust video 😁 🎉

For 2024, I solved Advent of Code in 25 different languages. Was a fun project, sometimes a bit (very) painful (*glances at Erlang and Zig*).

Code (bottom of post) and summary for each language:

https://blog.aschoch.ch/posts/aoc-2024

Keep in mind that I used most of these languages for a few hours tops, so my judgement is very much subjective.

What's your favourite of the bunch?

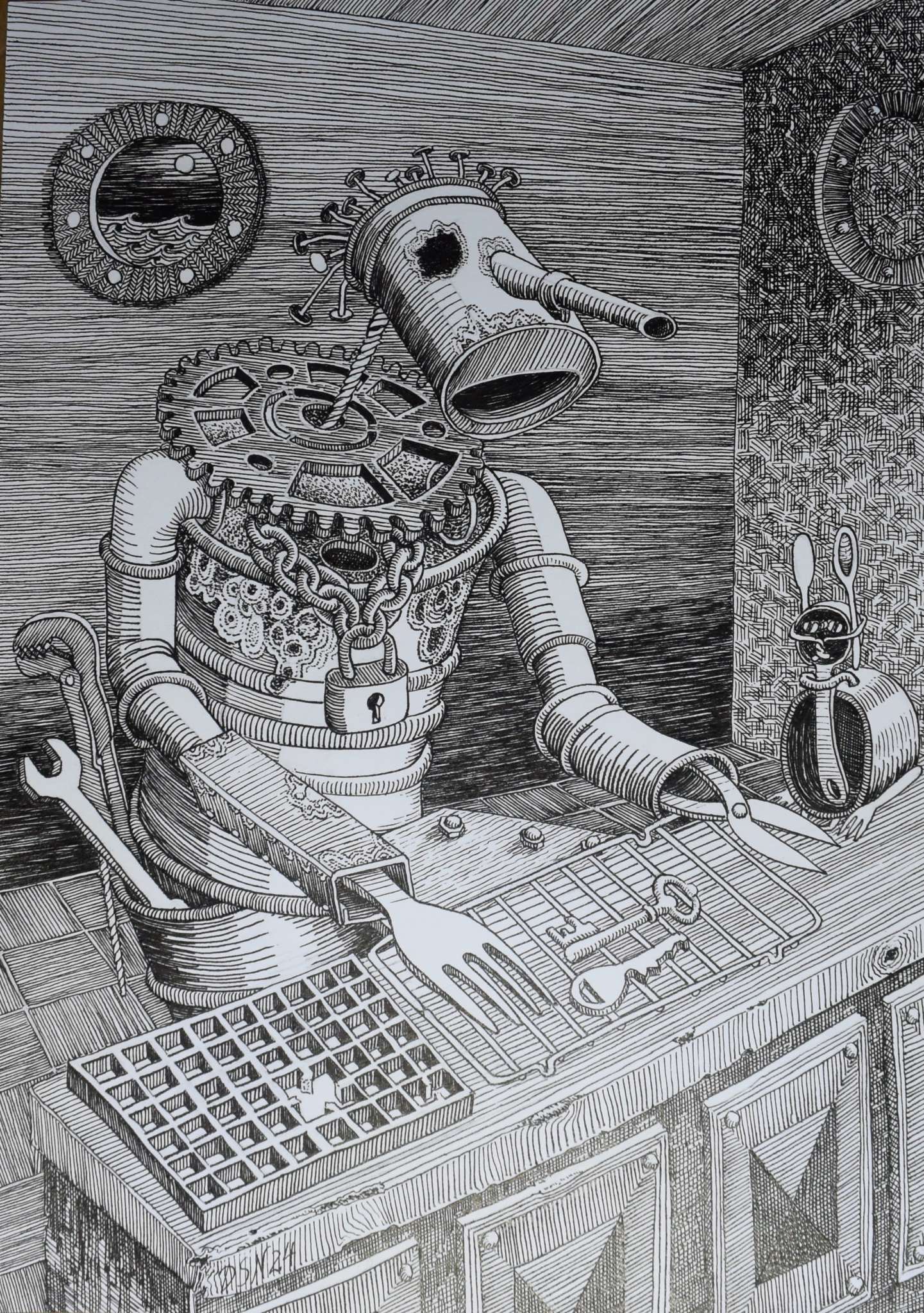

tags: #art #illustration #inktober #kleinekunstklasse #mastoart #rust

#art #mastoart #illustration #inktober #rust #kleinekunstklasse

Rostiger Nachzügler vom Inktober 2024 - Rusty straggler from Inktober 2024

#ruby #rails #development Had an app with a complex list rendered with ERB partials. I never took the time to turn on #YJIT, but with the latest 3.4.1 release I made sure #rust was present on the system. It gave a >3x perf boost (not an entirely fair comparison, since some of it may come from optimisations in the upgrade from 3.3.6 to 3.4.1). Perhaps I could have rewritten it to be more performant, but getting such improvements for 'free' is even better :D

Negative trait bounds or specialization would be real handy right now, but I guess I can work around it with PartialOrd. Maybe I'll make it use negative bounds behind an optional feature flag that's nightly-only

fine, scalars are anything that implements PartialOrd. feels a bit arbitrary? but also very math-y. "A number is anything you can say is bigger or smaller than another of its type"

Ah, there is one downside to this abstraction: Converting a T to a Col is ambiguous. If T is a scalar like 1 I want it to become [1,1,1], but if it's a vector like [1,2,3] I want it to become [[1,1,1],[2,2,2],[3,3,3]]. Basically I want "splat" to always splat starting from the inner-most scalar.

The solution is just to make use of a trait that scalars are likely to implement but vectors are not. num-traits' Num is a good choice because it requires things like Zero, One and from_str_radix, which scalars provide but a vector type is unlikely to implement
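
A minimal sketch of that idea, under stated assumptions: `Col` here is a hypothetical stand-in for the type in these posts, `Splat` is an invented trait name, and a local marker trait `Scalar` plays the role of num-traits' `Num` so the example needs no dependencies. The two impls do not overlap because `Col` never implements the marker trait.

```rust
/// Hypothetical stand-in for the post's `Col` type: N elements of type T.
#[derive(Clone, Copy, Debug, PartialEq)]
struct Col<T, const N: usize>([T; N]);

/// Hypothetical marker trait standing in for num-traits' `Num`:
/// implemented for scalars, never for `Col`.
trait Scalar: Copy {}
impl Scalar for i32 {}
impl Scalar for f64 {}

/// Repeat a value N times, always starting from the inner-most scalar.
trait Splat<const N: usize> {
    type Output;
    fn splat(self) -> Self::Output;
}

// Base case: a scalar becomes a column holding N copies of itself.
impl<T: Scalar, const N: usize> Splat<N> for T {
    type Output = Col<T, N>;
    fn splat(self) -> Self::Output {
        Col([self; N])
    }
}

// Recursive case: a column splats each of its elements.
// No overlap with the base case, since `Col` does not implement `Scalar`.
impl<T: Splat<N>, const M: usize, const N: usize> Splat<N> for Col<T, M> {
    type Output = Col<<T as Splat<N>>::Output, M>;
    fn splat(self) -> Self::Output {
        Col(self.0.map(<T as Splat<N>>::splat))
    }
}

fn main() {
    // A scalar is repeated directly.
    let scalar = <i32 as Splat<3>>::splat(1);
    assert_eq!(scalar, Col([1, 1, 1]));

    // A vector is splatted element by element.
    let vector = <Col<i32, 3> as Splat<3>>::splat(Col([1, 2, 3]));
    assert_eq!(vector, Col([Col([1, 1, 1]), Col([2, 2, 2]), Col([3, 3, 3])]));
    println!("{:?}", vector);
}
```

With a foreign trait such as `Num` the same two-impl layout is what the post describes; whether it behaves identically there is an assumption this sketch does not test.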

Representing a matrix as a vector of vectors has some pretty cool properties

If you generalize the dot product so that it can be done whenever the elements of the left and right hand side can be multiplied and summed (multiply here being the Hadamard product), then matrix multiplication is just the left hand side with each element dot-producted with the right hand side

    impl<L, R, const N: usize> Dot<Col<R, N>> for Col<L, N>
    where
        L: Mul<R, Output: Sum + Copy> + Copy,
        R: Copy,
    {
        type Output = <L as Mul<R>>::Output;

        fn dot(self, rhs: Col<R, N>) -> Self::Output {
            zip(self.rows(), rhs.rows()).map(|(l, r)| l * r).sum()
        }
    }

    impl<L: Copy, R: Copy, const N: usize> MMul<Col<R, N>> for Col<L, N>
    where
        Col<R, N>: Dot<L, Output: Copy>,
    {
        type Output = Col<<Col<R, N> as Dot<L>>::Output, N>;

        fn mmul(self, rhs: Col<R, N>) -> Self::Output {
            Self::Output::try_from_rows(self.rows().map(|l| rhs.dot(l))).expect("Length mismatch")
        }
    }
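
To make the idea concrete, here is a self-contained sketch that can be run as-is. It is not the code above: `Col` is a hypothetical tuple-struct stand-in, `dot` and `mmul` are free functions rather than the `Dot`/`MMul` traits, and the products are folded with `Add` instead of `Sum`. What it keeps is the core observation that multiplying matrices is just dotting the right hand side with each row of the left hand side.

```rust
use std::ops::{Add, Mul};

/// Hypothetical stand-in for the post's `Col`: N elements of type T.
#[derive(Clone, Copy, Debug, PartialEq)]
struct Col<T, const N: usize>([T; N]);

// Element-wise sum of two columns, needed to add up partial products.
impl<T: Add<Output = T> + Copy, const N: usize> Add for Col<T, N> {
    type Output = Self;
    fn add(self, rhs: Self) -> Self {
        let mut out = self.0;
        for (o, r) in out.iter_mut().zip(rhs.0.iter()) {
            *o = *o + *r;
        }
        Col(out)
    }
}

// Column times scalar: scale every element.
impl<T: Mul<Output = T> + Copy, const N: usize> Mul<T> for Col<T, N> {
    type Output = Self;
    fn mul(self, rhs: T) -> Self {
        Col(self.0.map(|x| x * rhs))
    }
}

/// Generalized dot product: works whenever the elements can be multiplied
/// pairwise and the products can be added together.
fn dot<L, R, P, const N: usize>(lhs: Col<L, N>, rhs: Col<R, N>) -> P
where
    L: Mul<R, Output = P> + Copy,
    R: Copy,
    P: Add<Output = P>,
{
    let mut products = lhs.0.iter().zip(rhs.0.iter()).map(|(l, r)| *l * *r);
    let first = products.next().expect("columns must not be empty");
    products.fold(first, |acc, p| acc + p)
}

/// Matrix product: each row of `lhs` is dotted with `rhs`, where `rhs` is
/// treated as a column whose elements are themselves rows.
fn mmul<T, const M: usize, const N: usize, const K: usize>(
    lhs: Col<Col<T, N>, M>,
    rhs: Col<Col<T, K>, N>,
) -> Col<Col<T, K>, M>
where
    T: Add<Output = T> + Mul<Output = T> + Copy,
{
    Col(lhs.0.map(|row| dot(rhs, row)))
}

fn main() {
    // Plain dot product on scalars: 1*4 + 2*5 + 3*6.
    assert_eq!(dot(Col([1i32, 2, 3]), Col([4i32, 5, 6])), 32);

    // 2x2 matrices stored as a vector of row vectors.
    let a = Col([Col([1i32, 2]), Col([3, 4])]);
    let b = Col([Col([5i32, 6]), Col([7, 8])]);
    assert_eq!(mmul(a, b), Col([Col([19, 22]), Col([43, 50])]));
}
```

In `mmul`, the call `dot(rhs, row)` multiplies each row of `rhs` by one scalar from `row` and adds the scaled rows up, which is exactly one row of the product.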

tags: #rust

to: https://mastodon.social/users/Gargron

@Gargron ah so there's a #rust jinglejam. also there's a bluegrass one lol

I'm doing a code challenge in Crystal. As someone with a Ruby background who went on to learn Rust, you might think that it's a natural fit. However, I'm finding it to fall in the uncanny valley between the two: not as flexible as Ruby and without as expressive a type system as Rust, and the two play off of each other when I'm trying to do type gymnastics to get this very Ruby-like language to behave like Ruby.

tags: #c #rust #unix #zig

to: https://snac.bsd.cafe/modev

I'd say if you're happy with #C, there's no need to choose any second language. 🤷

Before even looking at any alternatives, the question should be "why not C". Some of the typical complaints are:

- memory safety – or, more generally, the fact that C is only partially defined, leaving a lot to the dangerous area of undefined behavior. There's no way to reliably detect undefined behavior by static code analysis, and it will often hide well at runtime. Especially errors regarding memory management often directly expose security vulnerabilities. In my experience, the risk can be reduced a lot by some good coding and design practices. Examples are avoiding magic numbers, using `sizeof` everywhere possible, preferably on expressions instead of type names, defining clear ownership of allocated objects (and, where not possible, adding manual reference counting), making ownership explicit by using `const` where appropriate and naming your functions well, and so on. Given also there's no guarantee alternative languages will safeguard you from all the possible issues and there are also a lot of other ways to create security vulnerabilities, my take on this would be: partially valid.

- programming paradigm – you can only program in classic procedural style. Well, that's simply not true. First of all, you can program whatever you want in any Turing-complete language, so what we talk about shouldn't be what's directly supported by the constructs of the language, but what's practically usable. In C, using some simple OOP is commonplace. Well, you can apply OOP to assembler programming without too much hassle, so it's not surprising you can do it in C, as it's a pretty thin abstraction on top of machine code. C helps further with its linkage rules, where everything in a compilation unit is by default inaccessible to other units, and its incomplete types, where only a type name is known, but neither its size nor inner structure, giving you almost perfect information hiding for free. If you want/need polymorphism, it gets a bit more complicated (you have to think about how you identify types and manage virtual tables for them), but still perfectly doable. You'll hit the practical limits of the language when you want to go functional. The very basics are possible through function pointers, but most concepts of functional programming can't be applied to C in a somewhat sane way. Unless that's what you need, my take would be: invalid.

- limited standard lib. Indeed, the C standard library misses lots of things that are often needed, for example generic containers like lists, hashtables, queues, etc. It's straightforward to build them yourself, but then, there are many ways to do that (with pros and cons). You'll find tons of different implementations, and many non-trivial libraries bring their own, which of course also increases the risk of running into buggy ones ... I wouldn't consider it a showstopper, but I'd mark this complaint as: valid.

Then, the next question would be: For what purpose are you looking for a second language?

For applications and services, there's already a wide range of languages used, and I'd say do what everyone does and pick what you're most comfortable with and/or think best suits the job. IOW, it makes little sense to ask what would be "the future": there have always been many different languages used, so just pick one that's unlikely to quickly disappear again, so you won't have to restart from scratch.

For systems programming "the future" has been C for many decades 😏 ... some people want to change that, actually for good reasons. IMHO, the current push for #Rust (I don't see anything similar regarding #Zig yet?) into established operating systems is dangerous. An operating system is very different from individual apps and services. It's required by these as a reliable and stable (in terms of no breaking changes) platform. It's complex and evolves over an extremely long period of time. It needs to interface with all sorts of hardware using all sorts of machine mechanisms (PIO, DMA, MMIO, etc.). I conclude it needs a very stable, proven and durable programming language. You'll find C code from 30 years ago that still just builds and works. IMHO a key factor is that there's an international standard for the C language, governed by a standards body that isn't controlled by any individual group. And correlated to that, there are many different implementations of the language. Before considering any different language in core areas of an established operating system, I'd like to see something similar for that new language.

Now, C was developed more or less together with #Unix, so back then nobody knew it would be such a success, and of course there was no standard yet. But then, I think this is the sane way to introduce a new systems programming language: Use it in a new (research?) OS.

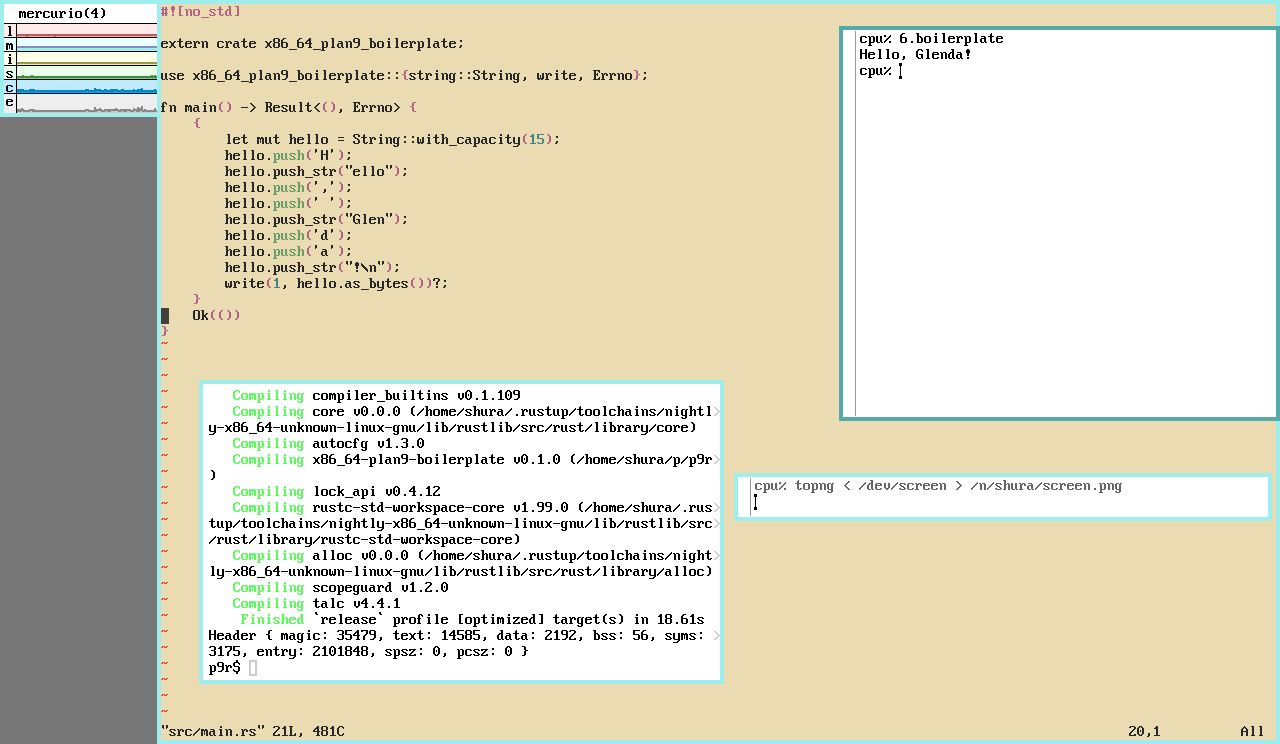

Rust, but it's on Plan9.